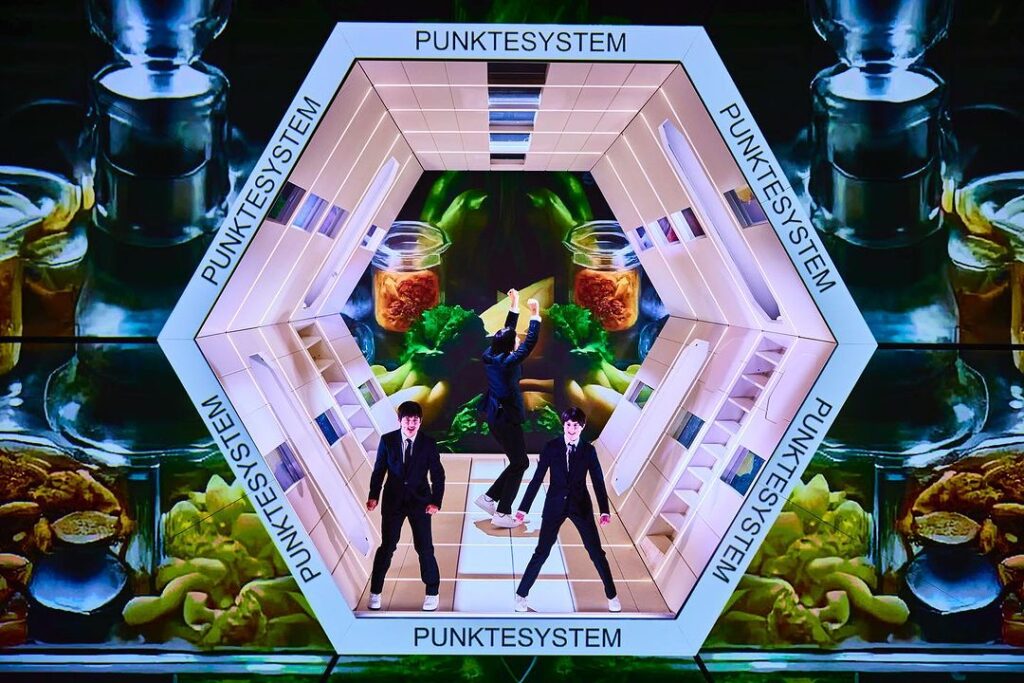

For the theatre play Remote Code Executions (RCE), based on the book by Sibylle Berg and directed by Kay Voges, I contributed AI-based visuals.

Together with nine other digital artists, I was tasked with creating visuals for different sections of the play. I built a pipeline in TouchDesigner (TD) around a special .tox component by DotSimulate that let me run StreamDiffusion, an extremely fast, real-time version of Stable Diffusion, directly inside TD.

The pipeline looked like this:

The entire text of the play was delivered as an audio file, spoken by an AI voice and edited together by Kay Voges.

- Transcribe the audio file via YouTube

- Let YouTube translate the transcription into English

- Use the time-coded transcription to prompt StreamDiffusion

- Generate a constantly changing image stream: the prompts come from the transcription, and the input is an audio-reactive visual that is itself driven by the audio file
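The prompting step above essentially comes down to looking up which line of the transcript is being spoken at the current playback time. Here is a minimal Python sketch of that lookup. It assumes the YouTube captions were exported as an SRT-style file. The names `Cue`, `parse_srt` and `prompt_at` are hypothetical and are not part of the DotSimulate component or of StreamDiffusion.

```python
import bisect
import re
from dataclasses import dataclass

# Matches SRT/VTT timestamps such as "00:01:02,500" or "00:01:02.500"
TIMESTAMP = re.compile(r"(\d+):(\d+):(\d+)[,.](\d+)")


@dataclass
class Cue:
    start: float  # seconds
    end: float    # seconds
    text: str     # spoken (translated) line, used as the prompt


def to_seconds(stamp: str) -> float:
    """Convert "HH:MM:SS,mmm" (three-digit milliseconds) into seconds."""
    match = TIMESTAMP.match(stamp.strip())
    if match is None:
        raise ValueError(f"not a timestamp: {stamp!r}")
    h, m, s, ms = match.groups()
    return int(h) * 3600 + int(m) * 60 + int(s) + int(ms) / 1000


def parse_srt(text: str) -> list[Cue]:
    """Parse SRT blocks: an index line, a "start --> end" line, then text lines."""
    cues = []
    for block in re.split(r"\n\s*\n", text.strip()):
        lines = block.strip().splitlines()
        if len(lines) < 3 or "-->" not in lines[1]:
            continue  # skip headers and malformed blocks
        start, end = lines[1].split("-->", 1)
        cues.append(Cue(to_seconds(start), to_seconds(end), " ".join(lines[2:])))
    return sorted(cues, key=lambda cue: cue.start)


def prompt_at(cues: list[Cue], t: float, fallback: str = "") -> str:
    """Return the text of the latest cue that started at or before time t."""
    starts = [cue.start for cue in cues]
    i = bisect.bisect_right(starts, t) - 1
    return cues[i].text if i >= 0 else fallback
```

Between cues, `prompt_at` keeps returning the last prompt instead of an empty string. As a result, the image stream never loses its prompt during pauses in the speech. In TouchDesigner, the returned string could be written into the StreamDiffusion component's prompt field every frame. That wiring is TD-specific and is not shown here.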

This allowed me to generate a huge amount of material very quickly.

Here you can see the output of my process:

- Left: the visual, with the transcription and translation

- Right: the generated AI stream

Using StreamDiffusion, I also defamiliarized some of the videos supplied to me, producing rapid, abstract visuals that were used throughout the piece.

Then I generated some AI zooms, producing images with a feedback technique. This led to some very trippy, computer-dream visuals. These took the computer the longest to render; in one case, I let it run for 3 days.
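A feedback zoom can be sketched as a loop with three steps. First, crop the centre of the last frame. Next, scale the crop back up to full size. Finally, let the image model repaint the enlarged, blurry region with new detail. Below is a minimal NumPy sketch of that loop. Here `img2img` is a hypothetical stand-in for one image-to-image diffusion pass, such as a StreamDiffusion call, and the default zoom factor is an arbitrary illustrative value.

```python
from typing import Callable, Iterator

import numpy as np


def zoom_in(frame: np.ndarray, factor: float) -> np.ndarray:
    """Crop the centre of `frame` by `factor` (> 1) and scale the crop back
    up to the original size using nearest-neighbour sampling."""
    h, w = frame.shape[:2]
    ch, cw = max(1, round(h / factor)), max(1, round(w / factor))
    top, left = (h - ch) // 2, (w - cw) // 2
    crop = frame[top:top + ch, left:left + cw]
    # Map every output row/column back to its source row/column in the crop
    rows = np.arange(h) * ch // h
    cols = np.arange(w) * cw // w
    return crop[rows[:, None], cols[None, :]]


def feedback_zoom(
    seed: np.ndarray,
    img2img: Callable[[np.ndarray], np.ndarray],  # hypothetical diffusion pass
    steps: int,
    factor: float = 1.05,
) -> Iterator[np.ndarray]:
    """Yield `steps` frames, each made by zooming into the previous frame
    and letting the image model repaint it."""
    frame = seed
    for _ in range(steps):
        frame = img2img(zoom_in(frame, factor))
        yield frame
```

Every frame needs a full diffusion pass. For that reason, long zooms at high resolution can take many hours or even days to render.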

Lastly, I created a visual that I had wanted to make for a long time:

I let the computer detect patterns, shapes, objects, and animals in clouds. I used MediaPipe in TouchDesigner to let the computer tell me what it sees when looking at clouds.
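Clouds only briefly resemble anything, so per-frame detections are likely to flicker. The sketch below shows one way to steady them. It assumes each frame's detector output (for example from MediaPipe) has already been reduced to `(label, score)` pairs. The class, the thresholds and the window size are hypothetical, and none of them are part of MediaPipe or of the original setup.

```python
from collections import Counter, deque
from typing import Iterable


class CloudWatcher:
    """Report only labels that persist across recent frames, so that
    one-frame detections in drifting clouds are ignored."""

    def __init__(self, window: int = 30, min_hits: int = 20, min_score: float = 0.3):
        self.history = deque(maxlen=window)  # one set of labels per frame
        self.min_hits = min_hits
        self.min_score = min_score

    def update(self, detections: Iterable[tuple[str, float]]) -> list[str]:
        """Add one frame of (label, score) pairs; return the stable labels."""
        self.history.append(
            {label for label, score in detections if score >= self.min_score}
        )
        counts = Counter(label for labels in self.history for label in labels)
        return sorted(label for label, n in counts.items() if n >= self.min_hits)

    @staticmethod
    def describe(labels: list[str]) -> str:
        """Turn the stable labels into a short sentence."""
        if not labels:
            return "I see only clouds."
        return "I see: " + ", ".join(labels) + "."
```

Raising `min_hits` relative to `window` makes the watcher more conservative. It then reports a shape only once that shape has held in the clouds for most of the recent frames.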

Picture credits: Moritz Haase, Marcel Urlaub

Video design, editing:

Digital Artists:

- Voxi Bärenklau

- Andrea Familari

- Max Hammel

- Michael Klein

- Arne Körner

- Julius Pösselt

- Max Schweder

- Mario Simon

- Jan Isaak Voges

- Robi Voigt

Director:

Stage design:

- Daniel Roskamp

Costumes:

Music: